INTRODUCTION

In this lesson, different cloud types will be compared to identify which type is best suited to meet an organization’s business needs. Technical details of new and/or previously discussed cloud components and features will also be explained.

Cloud service providers often adopt technical standards to assure their customers of their continued efforts to protect data and build conforming system architectures. When an organization chooses to adopt cloud services, the technical aspects of cloud computing, along with the business need, guide the selection of a cloud service provider. While weighing business needs, technical aspects, and standardization, one must also consider the technical challenges involved with cloud computing.

This lesson will discuss some of the challenges that are presented when migrating to the cloud.

LESSON OBJECTIVES

- Describe the technical differences between private and public types of clouds.

- Describe the following techniques for cloud computing deployment: Networking, Automation and Self-Service, Federation, and the Role of Standardization.

- Explain technical challenges and risks for cloud computing and methods to mitigate them.

- Explain the impact of cloud computing on application architecture and the application development process.

Public and Private Clouds

From the four deployment models – public, private, hybrid, and community – we are going to narrow our focus to the two most popular models, public and private. Here the two cloud types will be approached from a more technical perspective. To get started, first review the following web resource (required reading):

Difference Between Public Cloud and Private Cloud

A public cloud can be accessed from the Internet by the general public. A service provider usually provides public cloud services for free or at a pay-per-usage rate. The public cloud is the most standard of the cloud models: users typically have no physical or programmatic access to the underlying cloud infrastructure.

Google and Go Daddy hosting are examples of public cloud offerings. From a business point of view, the major variables impacting public cloud offerings are security, ownership, backup and recovery, and vendor lock-in.

Private Cloud Benefits

A private cloud can be accessed from the Internet by authorized users belonging to a distinct company or organization. The cloud infrastructure and components are all owned by the company or organization. Unlike the public cloud, private cloud deployments are often obscured and restricted from the general public. The types of businesses that opt for this type of cloud deployment have sensitive data that must be secured. One of the primary reasons that organizations adopt a private cloud approach is the ability to centrally control access to the cloud, files, folders, and other data within the cloud infrastructure. Private cloud deployments allow organizations to implement their own user authentication solution (e.g., LDAP and Microsoft Active Directory). Private cloud services provide for greater security, compliance, accountability, and systems control. However, the costs of private cloud deployments are greater, and provisioning of system resources may be limited based on the available resource pool of the provider.

When discussing private cloud deployments, a distinction has to be made between local and remote deployments.

- Local – The organization or company maintains ownership of cloud services.

- Remote – Cloud services are managed by third-party vendors, but systems are partitioned from resource pools and used by individual organizations.

There are many ways to describe local and remote private cloud deployments. Review the following online resource, Clarifying Private Cloud Computing, for an alternate description.

Network Models for Cloud Deployment

Cloud topologies are composed principally of three parts:

- Front-End – network software and hardware that allows users to connect to the application

- Back-End – network software and hardware that allows user information to be processed and stored

- Network – refers to the structure built on layers 2 and 3 of the OSI model (data link and network layers); governs communication between end devices

The network is one of the newest components to be virtualized. Layer 3 virtualization allows each cloud to be represented as a separate network with its own unique IP addresses and attributes.

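A cloud topology facilitates the deployment of virtual machines to any server in the network. One protocol commonly used to implement this virtualization is Virtual Extensible Local Area Network (VXLAN). VXLAN encapsulates Layer 2 frames in UDP packets so that they can cross a Layer 3 network, and its 24-bit network identifier allows roughly 16 million isolated segments, compared with about 4,000 for traditional VLANs. This larger segment space, rather than a larger IP address space, is what enables networked virtual machines to scale.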
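
The scaling benefit of VXLAN can be illustrated with a little arithmetic. The sketch below compares the identifier space of a traditional VLAN tag with that of VXLAN and packs a VXLAN header. It assumes the 8-byte header layout described in RFC 7348 (a flags byte with the I bit set, 24 reserved bits, a 24-bit VXLAN Network Identifier, and 8 reserved bits); the function name is illustrative only and does not come from any library.

```python
import struct

VLAN_ID_BITS = 12    # width of the 802.1Q VLAN ID field
VXLAN_VNI_BITS = 24  # width of the VXLAN Network Identifier (VNI) field

def build_vxlan_header(vni: int) -> bytes:
    """Pack an 8-byte VXLAN header for the given VNI (illustrative helper)."""
    if not 0 <= vni < 2 ** VXLAN_VNI_BITS:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # I flag set: the VNI field is valid
    # First 32-bit word: flags (8 bits) followed by 24 reserved bits.
    # Second 32-bit word: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", flags << 24, vni << 8)

print(2 ** VLAN_ID_BITS)               # 4096 possible VLAN ID values
print(2 ** VXLAN_VNI_BITS)             # 16777216 possible VNI values
print(build_vxlan_header(5000).hex())  # 0800000000138800
```

Each VNI identifies one isolated virtual network, so the wider field is what lets a provider give many tenants their own segment.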

Cloud environments typically use Software Defined Networking (SDN) to establish connections between cloud services. One of the most exciting applications of cloud solutions is cloud computing, which puts the vast resources of dozens or even hundreds of nodes together temporarily for the purpose of solving a problem or processing a dataset.

Cloud computing leverages big data, parallel processing, and sophisticated algorithms to solve problems in science and engineering 100 times faster than was previously possible. Read the online resource, Cloud Computing Architecture, to better understand cloud computing in action.

Once the architecture is developed, models are used to illustrate and explain how a process or product is supposed to work. There are three types of networking models used to describe the transition to cloud computing:

- Silo Model

- Virtualized Resource Model

- Cloud Computing Model

As these models are examined, visualize the transition of business computing from using a traditional data center, a standalone facility, to a virtualized distributed facility, and then to a cloud computing environment.

Silo Model

Data centers have traditionally been places where a massive amount of hardware and software is brought together and made available to clients. The silo model is the first and the least functional model. Note that, literally, a silo is a cylindrical building situated on a farm, as seen in Figure 1, used to store grain or other materials.

Figure 1: Silo

The silo model is used to depict the case where all of a data center’s physical resources are housed at the same location. All resources are compartmentalized or isolated. This concept is further explained in the online resource, The Danger of Cloud Silos. Silo model implementations are inefficient with respect to hardware resource utilization.

Virtualized Resource Model

To address the wastefulness of the silo model, the virtualized resource model was developed as an extension of the silo model. The virtualized resource model is less static, meaning it can be used to duplicate or virtualize network resources, like servers. The silo model does not allow for virtualization; therefore, if additional servers are needed, they have to be physically inserted into the environment. In the virtualized resource model, a hypervisor is used to create a software copy of the resource, as illustrated conceptually in Figure 2.

Figure 2: Visual Representation of Virtualization
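
The efficiency difference between the silo and virtualized resource models can be sketched numerically. The example below is a simplified illustration, not a description of how any particular hypervisor schedules virtual machines: it assumes each workload's demand is expressed as a percentage of one physical server, and it uses a simple first-fit placement rule. The workload figures are hypothetical.

```python
def silo_servers_needed(workloads):
    """Silo model: every workload gets its own dedicated physical server."""
    return len(workloads)

def virtualized_hosts_needed(workloads, host_capacity=100):
    """Virtualized model: place workloads (as VMs) onto shared hosts, first-fit."""
    hosts = []  # each entry is the capacity already used on one host
    for load in workloads:
        for i, used in enumerate(hosts):
            if used + load <= host_capacity:
                hosts[i] = used + load
                break
        else:
            hosts.append(load)  # no existing host has room, so add another
    return len(hosts)

# Hypothetical workloads, each as a percentage of one server's capacity.
workloads = [10, 25, 15, 30, 20, 40]
print(silo_servers_needed(workloads))       # 6
print(virtualized_hosts_needed(workloads))  # 2
```

In this example the silo model leaves most of each server idle, while the virtualized model consolidates the same workloads onto two hosts.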

Cloud Computing Model

The last and the most functional network model is the cloud computing model. The cloud computing model extends the virtualized resource model by allowing data center resources to be accessible from the Internet. This network model, illustrated in Figure 3, is location independent, on-demand, resource redundant, and optimized for high performance.

Figure 3: Conceptual Depiction of Cloud Computing

To realize cloud computing, there is still a need for hardware, but the supporting software infrastructure has been extended to encompass all resources that can be virtualized. One of the major players in data center technologies is Cisco Systems. Read their Case Study on the evolution of data centers (required reading).

Automation and Self-Service

One of the main features of cloud computing is its ability to be rapidly provisioned. To facilitate this, services are written to automate the operational functions previously carried out by humans. Automating a process or task saves time. When deploying cloud services, automation techniques ease the migration by a customer to a cloud environment. These techniques provide the ability to prescriptively apply memory, storage, and configuration options at the click of a button. Automation provides:

- Compliance with a standard

- Improved reliability and response time

- Better optimization

One of the goals in cloud computing is to automate service requests and to optimize the provisioning of resources.
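
Automated provisioning against a standard can be sketched as follows. This is a minimal illustration under stated assumptions: the template names, their sizes, and the `ResourcePool` class are hypothetical and do not correspond to any provider's API. The point is that a self-service request is satisfied from predefined templates, without human intervention, and is refused when the resource pool cannot cover it.

```python
from dataclasses import dataclass

# Hypothetical standard templates; real providers define their own catalog.
TEMPLATES = {
    "small": {"memory_gb": 2, "storage_gb": 50},
    "large": {"memory_gb": 16, "storage_gb": 500},
}

@dataclass
class ResourcePool:
    memory_gb: int
    storage_gb: int

    def provision(self, template_name: str) -> dict:
        """Apply a standard template and deduct its resources from the pool."""
        if template_name not in TEMPLATES:
            raise ValueError(f"unknown template: {template_name}")
        spec = TEMPLATES[template_name]
        if spec["memory_gb"] > self.memory_gb or spec["storage_gb"] > self.storage_gb:
            raise RuntimeError("resource pool exhausted")
        self.memory_gb -= spec["memory_gb"]
        self.storage_gb -= spec["storage_gb"]
        return dict(spec, template=template_name)

pool = ResourcePool(memory_gb=20, storage_gb=600)
server = pool.provision("large")
print(server)
print(pool)
```

Because every server is built from a template, compliance with the standard follows from the process itself rather than from manual review.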

Automation techniques are also used to authenticate users trying to access cloud services. Self-service access, therefore, must be controlled and secured to not allow unauthorized access. Obviously, there must be a balance between automation and security. Read the online resource, Balancing Self-Service and Managed Services in the Cloud.

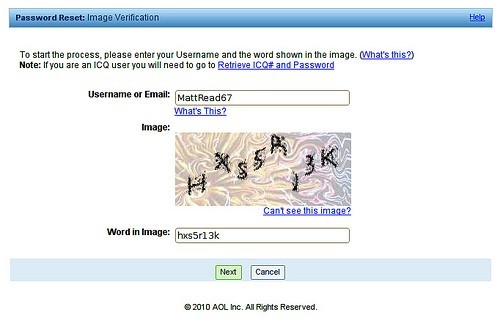

One of the main problems with automation is that computers cannot differentiate between human interaction with software and machine interaction with software. To address this issue, the Completely Automated Public Turing Test to Tell Computers and Humans Apart (CAPTCHA) program was developed, which attempts to validate whether the user is human or bot; that is, a computer or group of computers designed to use brute force algorithms to gain access to a privileged system account or Internet account (e.g., Google mail, Yahoo, AOL, etc.).

The display in Figure 4 is designed to be difficult for a machine to read; therefore, a response to this challenge is expected to come from a human. Do you see “HXS5R13K” in Figure 4?

Figure 4: CAPTCHA Example

Figure 4 illustrates a CAPTCHA program, which is used to distinguish between bots and humans. The concept behind CAPTCHA is simple: “what you see is what you type”. CAPTCHA uses distorted characters to make it difficult for computer programs to read the image using optical character recognition (OCR) software. When a user enters the wrong password a number of times, the CAPTCHA program will launch. CAPTCHA is also used during account creation to help verify that the user is human. Cloud providers offering self-service use CAPTCHA programs to help ensure human access.
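
The rule that a CAPTCHA launches after repeated wrong passwords can be sketched as a simple counter. The threshold of three attempts and the `LoginGuard` class are assumptions made for illustration; actual services choose their own thresholds and typically combine this with other signals.

```python
MAX_FAILED_ATTEMPTS = 3  # hypothetical threshold; real services choose their own

class LoginGuard:
    """Tracks failed logins and reports when a CAPTCHA challenge is required."""

    def __init__(self):
        self.failed = {}  # username -> consecutive failed attempts

    def record_attempt(self, username: str, success: bool) -> None:
        if success:
            self.failed.pop(username, None)  # reset the counter on success
        else:
            self.failed[username] = self.failed.get(username, 0) + 1

    def captcha_required(self, username: str) -> bool:
        return self.failed.get(username, 0) >= MAX_FAILED_ATTEMPTS

guard = LoginGuard()
for _ in range(3):
    guard.record_attempt("alice", success=False)
print(guard.captcha_required("alice"))  # True
print(guard.captcha_required("bob"))    # False
```

A brute-force bot that keeps guessing passwords reaches the threshold quickly and is then stopped by the challenge, while a legitimate user who logs in successfully has the counter reset.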

Other benefits of automation and self-service include:

- Avoiding complex setup requirements for the user/customer

- Implementing standardization more effectively

- Utilizing resources more efficiently (e.g., when a server is not in use, it can be automatically powered off)

The challenges to automation are:

- Security – data is vulnerable as it traverses the network

- Compatibility – applications must be updated periodically to stay current

- Complexity – transformation of data and infrastructure from one environment to another is harder to encode as complexity increases

Federated Cloud Services

As discussed above, a time may come when a cloud provider can no longer meet the needs of its customers. Federation allows cloud service providers to purchase additional cloud service capabilities from other cloud vendors to meet their own customer needs.

Likewise, a cloud provider may also have an extra cloud data center (DC) in a different geographic location, which can be used to provide additional flexibility. Content Delivery Networks (CDNs) are used over large geographic areas to reduce the number of hops required to obtain data. A cloud service provider may set up CDN nodes in major cities located on each continent (excluding Antarctica). These nodes employ a caching mechanism that stores data from a primary node on multiple CDN nodes in the service provider network. A customer requesting service can then access a nearby CDN node instead of traversing additional, costly, time-consuming hops.
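
The caching mechanism can be sketched in a few lines. This is a simplified model with hypothetical class names: real CDNs also handle cache expiry, invalidation, and routing of customers to the nearest node, none of which is shown here.

```python
class OriginServer:
    """Stands in for the primary node that holds the authoritative data."""

    def __init__(self, content):
        self.content = content
        self.requests_served = 0

    def fetch(self, key):
        self.requests_served += 1
        return self.content[key]

class EdgeNode:
    """A CDN node that caches objects fetched from the origin."""

    def __init__(self, origin):
        self.origin = origin
        self.cache = {}

    def get(self, key):
        if key not in self.cache:  # cache miss: go back to the origin once
            self.cache[key] = self.origin.fetch(key)
        return self.cache[key]     # served from the local copy

origin = OriginServer({"/index.html": "<html>...</html>"})
edge = EdgeNode(origin)
for _ in range(5):
    edge.get("/index.html")
print(origin.requests_served)  # 1
```

Five customer requests reach the edge node, but only the first one travels all the way to the primary node; the rest are answered from the cache.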

CDNs provide a better quality of service for both the cloud service provider and the customer. High availability and load balancing are common reasons for federated services. Load balancers (LBs) are network appliances that distribute incoming traffic across multiple servers so that no single server is overwhelmed during peak hours. They monitor data traffic for the global site and optimize network management. Additionally, high availability ensures that if a service or server is not functioning, a redundant or mirrored system is available to take over.
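
Load balancing and high availability can be shown together in a short sketch. It assumes a simple round-robin policy and a manual `mark_down` call in place of real health checks; the class and server names are hypothetical.

```python
from itertools import cycle

class LoadBalancer:
    """Round-robin balancer that skips servers marked unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._rotation = cycle(self.servers)

    def mark_down(self, server):
        self.healthy.discard(server)

    def next_server(self):
        if not self.healthy:
            raise RuntimeError("no healthy servers available")
        while True:
            server = next(self._rotation)
            if server in self.healthy:
                return server

lb = LoadBalancer(["web-1", "web-2", "web-3"])
first_round = [lb.next_server() for _ in range(3)]
lb.mark_down("web-2")  # simulate a server failure
after_failure = [lb.next_server() for _ in range(4)]
print(first_round)    # ['web-1', 'web-2', 'web-3']
print(after_failure)  # ['web-1', 'web-3', 'web-1', 'web-3']
```

Requests are spread evenly while all servers are up, and after the simulated failure the remaining servers absorb the traffic without the customer noticing.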

To reiterate, federated services allow trusted connections to be established between two separate entities. These entities can then transmit data to and from each other, thereby creating a larger resource pool to meet customer demand or business needs.
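
The idea of a larger, combined resource pool can be sketched as follows. The `Provider` class, the provider names, and the capacity units are hypothetical; the sketch ignores trust establishment, pricing, and security, and shows only how a request that exceeds the home provider's capacity spills over to federated partners.

```python
class Provider:
    """A cloud provider with some amount of spare capacity."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity

    def allocate(self, units):
        granted = min(units, self.capacity)
        self.capacity -= granted
        return granted

def place_request(units, home, partners):
    """Fill a request from the home provider first, then from partners."""
    pool = [home, *partners]
    if units > sum(p.capacity for p in pool):
        raise RuntimeError("federation cannot satisfy the request")
    placement = {}
    remaining = units
    for provider in pool:
        if remaining == 0:
            break
        granted = provider.allocate(remaining)
        if granted:
            placement[provider.name] = granted
            remaining -= granted
    return placement

home = Provider("home", 10)
partners = [Provider("partner-a", 5), Provider("partner-b", 20)]
print(place_request(18, home, partners))
# {'home': 10, 'partner-a': 5, 'partner-b': 3}
```

The customer sees a single request fulfilled, even though three providers contributed capacity behind the scenes.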

CloudSwitch, for example, is a Verizon software solution that can bridge or make a federated connection between an internal data center that has reached capacity and a cloud DC.

Maintaining security and adhering to compliance standards are critical when data is being transmitted from one entity to another. Each cloud service provider may operate using different standards and security practices, so how can a cloud service provider offer a standard of security in a federated environment? The Service Level Agreement (SLA) provides members of a federated environment with the audit policies, network topologies, locations, and security standards being implemented at each site. The SLA is used, among other things, to inform each cloud provider of how security is handled within the respective provider environments comprising the federation.